Junior's Journey - Senior Project

-----

What is Junior's Journey?

An interactive animation about a lost baby dinosaur, Junior. Help Junior find his way home by deciding which path he takes throughout the story. You’ll meet lots of friendly and approachable characters who are trying to help Junior along the way!

Three other Interactive Multimedia and Design students and I worked on Junior’s Journey from April 2017 to April 2018 as our undergraduate senior project at Carleton University.

My role in this project:

Lead Programmer and User Interface/Experience Designer

Target Audience:

Children ages 6-12 years old.

User Experience:

To help Junior find his way home, the user votes on which path Junior takes. Two options are presented at every decision point.

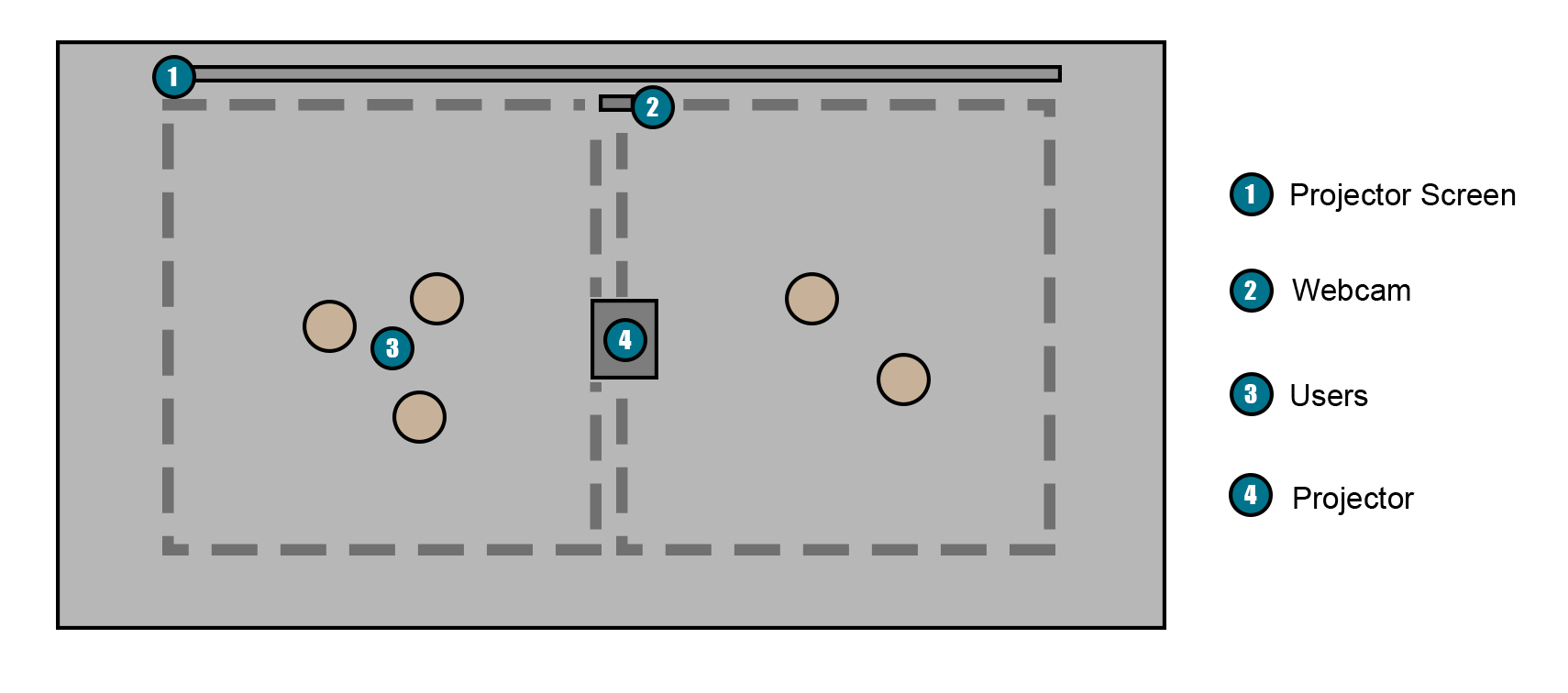

The input method for this interactive environment is the user’s body. Users move to the left or right side of the room to choose the path they would like to follow. Using webcam tracking, the application determines which side of the room has more votes (that is, more people).

To help explain how to interact, we incorporated an agent into the voting process. The agent points to the left and right in time with a voice-over that explains what each direction leads to. Because our target audience is ages 6-12, this was intended to help younger children decide more quickly: they can hear what they are supposed to do instead of relying solely on reading the prompts on screen.

A visual indicator on screen shows which option is receiving more votes. Each option has a progress bar that grows as more people stand on that side of the room. The option with the larger bar wins.
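The relationship between head count and bar size can be sketched as follows. This is a minimal illustration, not the project's actual code: the `BarFill` struct and `barFill` function are hypothetical names, and scaling each bar by its share of the total votes is an assumption (the original only states that a bar grows with the number of people on its side).

```cpp
#include <cassert>

// Hypothetical result type: fill fraction (0.0 to 1.0) for each progress bar.
struct BarFill {
    float left;
    float right;
};

// Assumption: each bar is filled in proportion to its share of all votes.
// With no votes at all, both bars stay empty.
BarFill barFill(int leftVotes, int rightVotes) {
    assert(leftVotes >= 0 && rightVotes >= 0);
    const int total = leftVotes + rightVotes;
    if (total == 0) {
        return {0.0f, 0.0f};
    }
    return {static_cast<float>(leftVotes) / static_cast<float>(total),
            static_cast<float>(rightVotes) / static_cast<float>(total)};
}
```

In a drawing loop, each fraction would be multiplied by the maximum bar width in pixels to get the rectangle to draw.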

Interaction Flowchart

Room Layout

User Testing:

We wanted to determine if our input method was suitable for the intended audience. We recruited 16 testing participants. At this point, the webcam tracking was not set up, so we tested with the Wizard of Oz testing method. The testing participants still used their bodies as an input method but the votes were controlled from the laptop.

We observed their interactions to see if they understood how to vote. Participants generally understood how it worked, but it was clear that adding an agent to provide visual cues, along with an indicator of which option was winning, would help.

After testing, we held a focus group and administered a questionnaire to get a deeper understanding of the users’ experience. The focus group questions gave the testing team a general sense of what participants thought of the story, whether they had fun, whether they would watch again, and so on.

The questionnaire was more specific, asking about the interactivity and character development. These questions helped us find out how we needed to improve the story and the interaction method.

We used this feedback to make the changes needed. Overall, participants enjoyed the experience but wanted more time to vote. Increasing the voting time and adding an agent to the voting screen both improved the user’s experience. As you can see below, the final voting interface included the agent, a timer, and a voice-over explaining how to cast your vote:

Programming:

The application was developed in C++ with openFrameworks, using OpenCV for webcam tracking. When the application runs, the webcam turns on and checks for a “background”. This background is then used to determine whether there is any activity in front of the camera. Each time a scene ends and the user is given the opportunity to choose a path for the story, the webcam starts to track blobs and movement. Whichever side of the room has the most blobs is considered the winner, and the movie advances along that path.
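The final step, deciding a winner from the tracked blobs, can be sketched in plain C++ without the openFrameworks or OpenCV dependencies. This is a simplified illustration under stated assumptions, not the project's actual code: `Blob`, `VoteCount`, `Side`, `countVotes`, and `winningSide` are hypothetical names, each blob is reduced to the x coordinate of its centroid, the frame is split at its horizontal midpoint, and a tie is reported separately because the original does not say how ties were resolved.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a tracked blob: only the horizontal
// centroid position (in pixels from the left edge of the frame) is used.
struct Blob {
    float centroidX;
};

struct VoteCount {
    int left = 0;
    int right = 0;
};

enum class Side { Left, Right, Tie };

// Assumption: a blob votes "left" if its centroid lies in the left half
// of the camera frame, otherwise "right". A mirrored camera image would
// need the two sides swapped.
VoteCount countVotes(const std::vector<Blob>& blobs, float frameWidth) {
    assert(frameWidth > 0.0f);
    VoteCount votes;
    const float mid = frameWidth / 2.0f;
    for (const Blob& blob : blobs) {
        if (blob.centroidX < mid) {
            ++votes.left;
        } else {
            ++votes.right;
        }
    }
    return votes;
}

// The side with more blobs wins; equal counts are reported as a tie.
Side winningSide(const VoteCount& votes) {
    if (votes.left > votes.right) return Side::Left;
    if (votes.right > votes.left) return Side::Right;
    return Side::Tie;
}
```

In a real openFrameworks application, the centroids would come from the blob tracker's output for the current frame rather than from a hand-built vector.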

When developing this application, it was important to understand every possible path the animation could take and to have those paths mapped out. Organizing the code into multiple header and source files kept the main source file as straightforward as possible.
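One way such a path map might be represented is as a lookup table from each scene to the scenes that follow a left or right vote. The sketch below is an assumption about structure only: the `Scene` struct, the `StoryMap` alias, and both functions are hypothetical, and an empty string is used here to mark an ending.

```cpp
#include <map>
#include <string>

// Hypothetical scene record: the scene that follows a left or right vote.
// An empty string means the story ends on that branch.
struct Scene {
    std::string leftNext;
    std::string rightNext;
};

using StoryMap = std::map<std::string, Scene>;

// Returns the scene that follows `current` for the winning side,
// or an empty string if `current` is unknown or the branch is an ending.
std::string nextScene(const StoryMap& story, const std::string& current,
                      bool leftWon) {
    const auto it = story.find(current);
    if (it == story.end()) {
        return "";
    }
    return leftWon ? it->second.leftNext : it->second.rightNext;
}

// Checks that every non-empty branch target is itself a scene in the map,
// so no vote can lead to a scene that was never defined.
bool allTargetsExist(const StoryMap& story) {
    for (const auto& entry : story) {
        const Scene& scene = entry.second;
        if (!scene.leftNext.empty() && story.count(scene.leftNext) == 0) {
            return false;
        }
        if (!scene.rightNext.empty() && story.count(scene.rightNext) == 0) {
            return false;
        }
    }
    return true;
}
```

Keeping the map in its own header and source file, separate from the tracking code, would fit the file organization described above.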

Moving forward, the application could be refined further to detect each participant’s figure and use that data throughout the entire experience.